Hiroaki Teraoka

July 22nd, 2022.

This is a reproduction of the PDF file I published on this blog on July 22nd, 2022. The PDF file was difficult to read without downloading it. Thus, I reproduce the contents of the PDF file here for readers who do not want to download the file. I had to change some minor parts because underlines and bar notations are unavailable on WordPress.

1. Introduction—Before generative grammar.

In 1963, Joseph H. Greenberg proposed that human languages had universal common features. Greenberg meant that all human languages followed some common rules. To find these common rules, Greenberg selected 30 languages almost at random and carefully examined them. Greenberg identified subjects (S), objects (O) and verbs (V) as fundamental elements of sentences. A simple calculation tells you that you can arrange these 3 elements (viz. S, V and O) in 3 × 2 × 1 = 6 different orders. However, he found only languages with SVO, SOV and VSO word orders in his sample. This finding led Greenberg to conclude that languages in which objects precede subjects are extremely rare or do not exist. Then, he classified attested languages into two categories, namely, languages with VO type word orders and those with OV type word orders. According to Greenberg, VO type languages tended to have prepositions, whereas OV type languages tended to have postpositions. Greenberg also found that only in VO type languages was a question particle placed at the initial position of a clause. In contrast, in OV languages, a question particle was placed at the final position of a clause, if a sentence had a question particle at all.
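The "simple calculation" mentioned above can be checked with a few lines of Python. This is only an illustrative sketch: the variable names are my own, and the set of attested orders is simply the three orders reported above for Greenberg's sample, not a claim about all languages.

```python
from itertools import permutations

# The three elements Greenberg treated as fundamental: subject, verb, object.
ELEMENTS = ("S", "V", "O")

# Every logically possible ordering of the three elements (3! = 6).
all_orders = ["".join(p) for p in permutations(ELEMENTS)]

# The three orders reported above for Greenberg's 30-language sample.
attested = {"SVO", "SOV", "VSO"}

# The remaining orders, which were not found in the sample.
unattested = [order for order in all_orders if order not in attested]

print(len(all_orders), all_orders)
print(unattested)
```

In each of the three unattested orders, O comes before S, which is the pattern behind Greenberg's conclusion that object-before-subject languages are extremely rare.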

Here we check examples of OV type and VO type languages. Japanese has OV type word orders and English has VO type word orders. We examine yes-no question clauses in both Japanese and English.

(1) (a) I do not know [whether he is guilty]

(b) I do not know [if he is guilty]

(c) Is he guilty?

What exactly Greenberg meant by ‘particles’ remains unclear. Usually, particles do not change their word forms for agreement or case marking. Typically, particles lack rich semantic meanings but have grammatical functions. In (1ab), whether and if can be said to be yes-no question particles. Bracketed clauses in (1ab) are called embedded clauses. English, which has VO word orders, places question particles at the initial position of embedded clauses. (1c) indicates that present-day English seems to lack yes-no question particles for main clauses.

In contrast to the English language, Japanese has OV word orders. Yes-no question clauses in Japanese are as follows:

(2) Japanese

(a) kare-wa kashikoi-no ka

he-TOP clever Question

‘Is he clever?’

(b) [kare-ga kashikoi-no ka ] wakara nai

he-NOM clever Question know not

‘I do not know whether he is clever.’

Each ka in (2ab) can be said to be a yes-no question particle. In both embedded clauses and main clauses, yes-no question particles are placed at the ends of the clauses.

Thus far, we have seen some of Greenberg’s universals of human languages. We have also checked the validity of these universals. You may wonder why human languages have such universals. Unfortunately, Greenberg did not explain why human languages have such common rules. He claimed that VO languages went well with preposition constructions and clause-initial question particles. His claim does not explain anything. The task of explaining the mechanisms behind these universals of human languages has been left to other linguists.

2. Generative grammar’s approach to sentences.

Linguistics has several branches. One of these branches is generative grammar. Noam Chomsky started generative grammar by writing a thesis titled The Logical Structure of Linguistic Theory in 1955. Ever since, generative grammarians have studied how we generate phrases and sentences out of the lexicon (i.e. words). Generative grammarians believe in an innate hypothesis. In the innate hypothesis, generative grammarians claim that we have the source of language inside our brains. Chomsky (1995) calls this source of language Universal Grammar (UG). When we set the parameters of Universal Grammar, the UG grows into a full language. This means that all naturally developed human languages follow some common rules because they have all developed from UG. (Here, “naturally developed languages” means languages which have native speakers. For example, a computer programming language is not a naturally developed language.) Generative grammarians have studied many languages to shed light on the true nature of UG. By doing so, generative grammarians have explained some of Greenberg’s universals as a by-product. In the following section, we see how generative grammarians analyze sentences.

According to Chomsky (1995), we need a process called merge to create phrases and sentences. Merge combines one constituent (such as a word or a phrase) with another to give us a larger constituent. For example, when we merge the determiner the and the NP (Noun Phrase) book, we get a larger constituent (i.e. a phrase) the book. A question arises here: what is the overall grammatical feature of the resulting phrase [the book]? Semantically, the phrase seems to have the grammatical feature of a noun. However, we are concerned here with the syntactic feature of the phrase. We cannot put the phrase [the book] in positions where NPs usually can be placed.

(3) (a) I want a [ ].

(b) This [ ] is interesting.

Bracketed places in (3) are places where NPs can be placed. Both follow determiners. We cannot put the phrase the book in the bracketed spaces. This means that once a determiner is merged with an NP, the resulting phrase loses the grammatical feature of the NP and gains that of the determiner (D). Thus, the resulting phrase is categorized as a DP (determiner phrase).

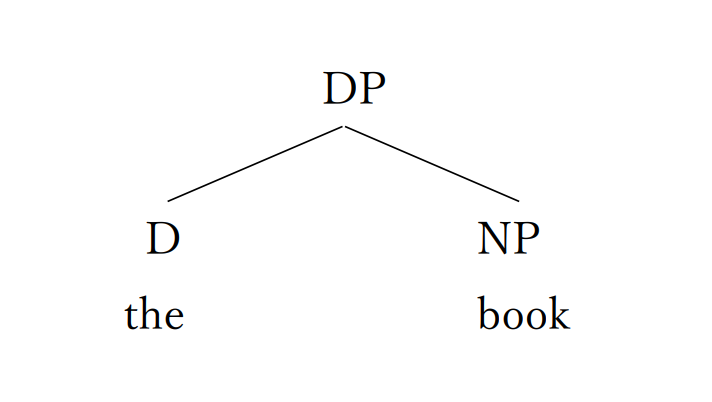

(4) The internal structure of the DP the book

The tree diagram in (4) shows the result of our first merge. This tree diagram shows that the resulting phrase [the book] is a DP and the D the determines the grammatical feature of the overall phrase. The constituent that determines the overall grammatical feature of the resulting phrase is called the head of the phrase. Thus, the D (determiner) the is the head of the DP. The NP book does not determine the grammatical feature of the DP. Linguists call such a constituent the complement of the head (or the complement of the phrase). Thus, the NP book is the complement of the head D the. A head and a complement are merged to form a phrase.

Another important point is that a head selects its complement (Donati and Cecchetto 2011). For example, the head D the requires an NP as its complement. If you try to merge the head D the with a V (verb) do as the D’s complement, the resulting phrase [*the do] is ungrammatical. (* indicates that the phrase or the sentence is ungrammatical.) Hence the validity of the tests in (3).

We merge the DP the book and a V read to form a larger phrase read the book. This time, the DP is treated as a single constituent. The resulting phrase read the book seems to have the behavior of a larger verb. For example, the phrase read the book can be placed in bracketed positions in (5a-c).

(5) (a) He will [ ].

(b) He can [ ].

(c) He wants to [ ].

We can place verbs such as swim and jump in the bracketed spaces in (5a-c). We can also place the resulting phrase read the book in the same places. Thus, the bracketed positions in (5a-c) are places where VPs (Verb Phrases) can be placed. The fact that the resulting phrase read the book can be placed in (5a-c) means that the phrase has the grammatical features of a verb phrase. We can draw a tree diagram (6) to show the internal structure of the VP read the book.

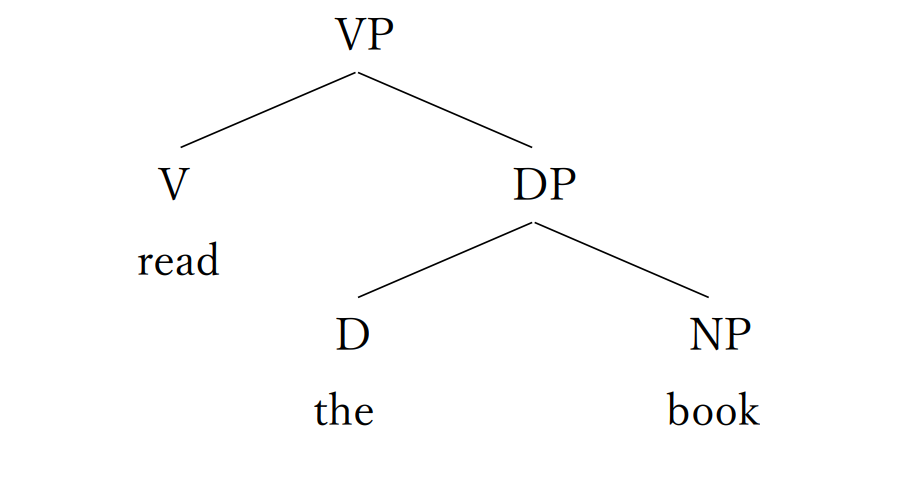

(6) The internal structure of the VP read the book

The V read determines the grammatical feature of the VP read the book. Thus, the V read is the head of the VP. The DP the book is the complement of the VP.
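The two merge operations described so far can be sketched as a small data structure. This is a toy model under my own assumptions: the class `Node` and the functions `merge` and `brackets` are hypothetical names invented for illustration, and labelling the result by adding "P" to the head's category is a simplification of the idea that the head determines the grammatical feature of the phrase.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    label: str                            # category label, e.g. "D", "NP", "VP"
    word: Optional[str] = None            # set for words, None for merged phrases
    head: Optional["Node"] = None         # the constituent that labels the phrase
    complement: Optional["Node"] = None   # the constituent selected by the head


def merge(head: Node, complement: Node) -> Node:
    """Combine a head with its complement; the head's category labels the result."""
    return Node(label=head.label + "P", head=head, complement=complement)


def brackets(node: Node) -> str:
    """Render a constituent as a labelled bracketing, head first."""
    if node.word is not None:
        return f"[{node.label} {node.word}]"
    return f"[{node.label} {brackets(node.head)} {brackets(node.complement)}]"


# First merge: the D "the" with the NP "book" gives a DP.
dp = merge(Node("D", "the"), Node("NP", "book"))
# Second merge: the V "read" with that DP gives a VP.
vp = merge(Node("V", "read"), dp)

print(brackets(vp))  # [VP [V read] [DP [D the] [NP book]]]
```

The sketch does not model selection, so it would happily build the ungrammatical [*the do]; a fuller model would have to check that the complement is of a category the head accepts.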

Careful readers might have noticed that the head precedes its complement in every merge operation so far. In the first merge operation, the head D precedes its complement NP. In the second merge operation, the head V precedes its complement DP. These word orders are not accidental. Only two word orders are possible for a head and its complement: either the head precedes its complement or the head follows its complement. This word order is governed by the value of the head-initial parameter (Chomsky 1995, Radford 2004, 2009, 2016, Roberts 2021 among many others).

Generative grammarians believe that human languages have parameters. No linguist knows the exact number of parameters a human language has. Roberts (2007) counted the number of parameters linguists had already discovered and concluded that a human language had at least 30 parameters. However, more recent work suggests that a human language has far more parameters (Roberts 2021). Roberts (2007, 2021) claims that the head-initial parameter, which governs the word order of a head and its complement, is one of the most fundamental parameters (a big parameter in his terminology).

Parameters are like switches in your brain. Radford (2004, 2009, 2016) claims that if your brain has set the value of the head-initial parameter as positive, heads precede their complements in all phrases you produce. If you have set the value of the head-initial parameter as negative, the head follows its complement in every phrase you utter. Parameters were first proposed to explain children’s first language acquisition. Radford (2016) claims that when a child realizes that the language people around her speak has the positive value for the head-initial parameter, she sets the head-initial parameter in her brain as positive. Once the head-initial parameter has been set as positive in her brain, heads precede their complements in all the phrases she produces. Thus, acquiring the grammar of your first language is just a matter of setting parameters (Chomsky 1995). Biologically, human beings have Universal Grammar. Small children are just setting the values of the parameters of Universal Grammar. Linguists call this hypothesis of first language acquisition the innate hypothesis. The innate hypothesis has been severely attacked by cognitive grammarians such as Joan Bybee. I would like to prove the validity of the innate hypothesis in a later section. (I have already written about a language creation case in Nicaragua. See the blog post below for that case.)
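The switch-like behavior of the head-initial parameter can be illustrated with a short Python sketch. Everything here is a toy assumption of mine: phrases are plain tuples, `linearize` is a hypothetical helper, and the function mechanically applies one parameter value to every phrase. The head-final output is therefore not a sentence of any real language; it only shows what "the head follows its complement in every phrase" means for this one structure.

```python
# A phrase is a tuple (label, head, complement); a word is a plain string.
VP = ("VP", "read", ("DP", "the", "book"))


def linearize(phrase, head_initial):
    """Spell out a phrase as a list of words under one head-initial setting."""
    if isinstance(phrase, str):
        return [phrase]
    _label, head, complement = phrase
    head_words = linearize(head, head_initial)
    complement_words = linearize(complement, head_initial)
    if head_initial:
        return head_words + complement_words   # head precedes its complement
    return complement_words + head_words       # head follows its complement


print(" ".join(linearize(VP, head_initial=True)))   # read the book
print(" ".join(linearize(VP, head_initial=False)))  # book the read
```

The hierarchical structure is identical in both calls. Only the single Boolean value differs, which is why one setting is enough to fix the order of every head and complement in the sketch.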

Human languages seem to have other parameters. For example, the value of the wh-initial parameter tells us whether a language moves all the question phrases (such as who, when and what) to the initial position of clauses. The value of the null-subject parameter governs whether a language allows null subjects (like Spanish and Italian) or not (like English).

Thus far, we have made the VP read the book. We need to add tense to this VP. Thus, the VP read the book is merged with a T (tense) will to form a larger phrase will read the book. We would like to call the resulting phrase a TP. However, we cannot do so. The phrase will read the book is somehow incomplete.

(7) (a) *will read the book.

(b) I will read the book.

For example, the resulting phrase *will read the book is ungrammatical when used as an independent phrase. (In a diary style, (7a) may be acceptable.) We need a subject. A problem is that a subject is neither a head nor a complement. A subject is merged as a third category, namely, a specifier. Ray Jackendoff (1977) put forward the idea of specifiers.

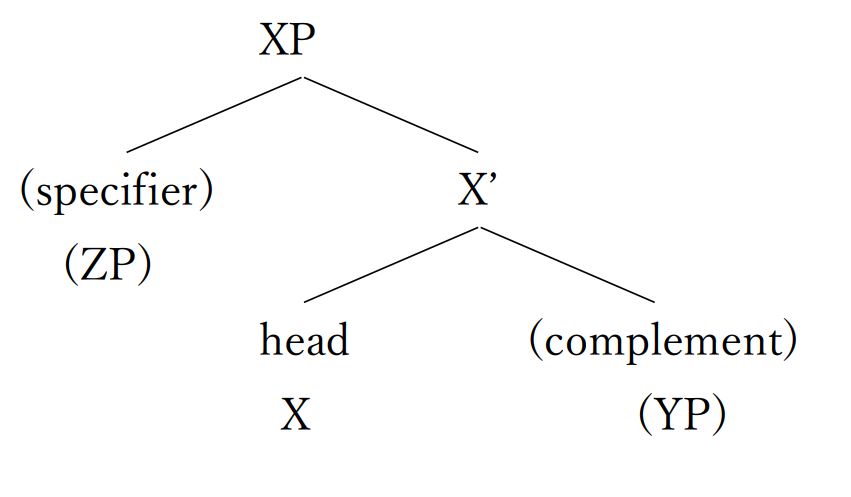

Ray Jackendoff wrote a book titled X-bar syntax—A Study of Phrase Structure in 1977. Jackendoff claims that the internal structure of a phrase can be diagramed as the following tree diagram show. Here, I slightly modify Jackendoff’s (1977) original analysis so that it will be compatible with Chomsky’s (1995) minimalist approach.

(8) The internal structure of a phrase

The tree diagram (8) shows that the head X and its complement YP merge to form the X-bar. (X-bar is sometimes written as X’.) This X-bar merges with the specifier (YP) to form the full phrase XP. Linguists call Jackendoff’s analysis X-bar theory. Jackendoff (1977) thought that the grammatical feature of the head X is projected upward through the X-bar to the XP. Thus, he called the X-bar an intermediate projection and the XP a maximal projection. According to Jackendoff, the head X itself is a minimal projection. The X-bar is called the intermediate projection because the X-bar is larger than the head X but smaller than the maximal projection XP. The bar notation (X-bar / X’) was devised to indicate that the X-bar is larger than the head X. Keep in mind that the specifier and the complement are optional. When the phrase lacks the specifier, the head X and its complement YP merge to form a maximal projection, namely, XP. Thus, in this case, there is no intermediate projection (X-bar). When the phrase lacks both specifier and complement, the head is the maximal projection.

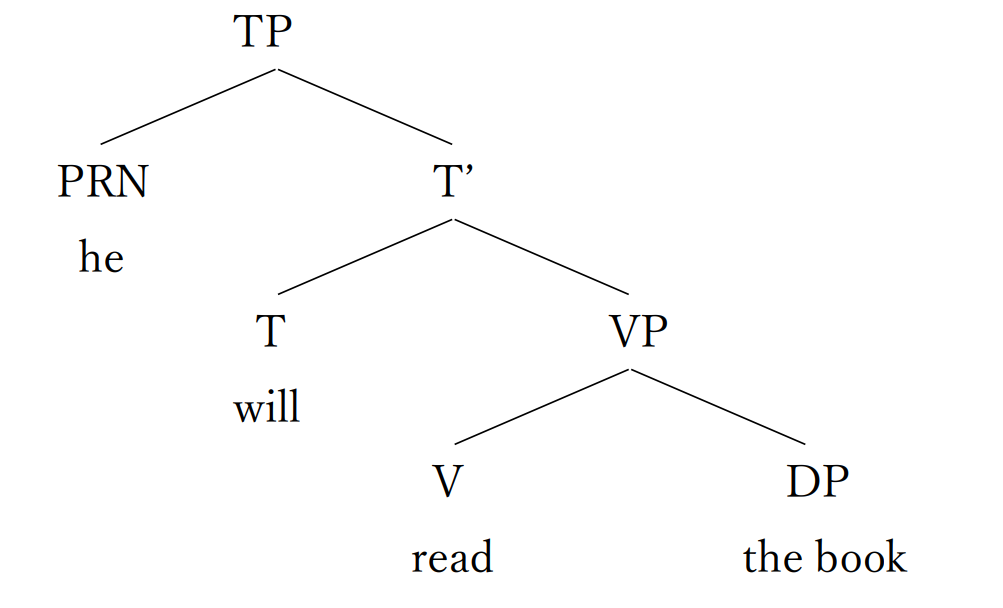

Adopting Jackendoff’s X-bar theory, we analyze the incomplete phrase will read the book as a T-bar. We merge this T-bar with the pronoun he to form a complete TP. This pronoun he is merged as the specifier of the TP. The tree diagram in (9) shows the internal structure of the TP.

(9) The internal structure of the TP he will read the book
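The X-bar schema and the TP in (9) can be written out as labelled bracketings with a short Python sketch. The function `project` is a hypothetical helper of my own, not part of any linguistic toolkit. It follows the three cases described above: a bare head is itself the maximal projection, a head plus a complement forms XP directly when there is no specifier, and an intermediate X’ appears only when a specifier is present. The case of a specifier without a complement is not discussed above, so its treatment here is my own assumption. The category of the pronoun he is left unlabelled because the text does not specify it.

```python
def project(cat, head, complement=None, specifier=None):
    """Return a labelled bracketing for a phrase of category `cat` headed by `head`."""
    if complement is None and specifier is None:
        # A bare head is itself the maximal projection.
        return f"[{cat}P {head}]"
    structure = f"[{cat} {head}]"
    if complement is not None:
        # Head and complement merge: X' if a specifier will follow, XP otherwise.
        level = f"{cat}'" if specifier is not None else f"{cat}P"
        structure = f"[{level} {structure} {complement}]"
    if specifier is not None:
        # The specifier merges last and closes off the maximal projection.
        # (With no complement, the head merges with the specifier directly.)
        structure = f"[{cat}P {specifier} {structure}]"
    return structure


np = project("N", "book")
dp = project("D", "the", complement=np)
vp = project("V", "read", complement=dp)
tp = project("T", "will", complement=vp, specifier="he")

print(tp)  # [TP he [T' [T will] [VP [V read] [DP [D the] [NP book]]]]]
```

Calling `project("T", "will", complement=vp)` without a specifier labels the result TP rather than T’, which is exactly the step the text rejects for the incomplete phrase will read the book; the sketch has no way of knowing that T requires a subject.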

Thus far, we have made the TP he will read the book. Although this TP seems to be a complete clause (sentence), it is not. According to Chomsky (1981, 1986, 2021), Radford (1988, 2004, 2009, 2016), Roberts (2007, 2021) and many other researchers, a clause needs a complementizer phrase (CP). A complementizer is difficult to define. To keep the explanation simple, I define a complementizer as a head which takes a TP as its complement. In English, overt complementizers such as that in (10a) and whether in (10e) appear in embedded clauses. In the examples in (10a-e), the embedded clauses are indicated by outer brackets. The heads of CPs are in boldface. The specifiers of CPs are underlined. (The underlines have disappeared because WordPress does not allow underlines.) The tree diagrams in (11) show the internal structures of the embedded clauses of (10a-c).

(10) (a) I know [CP [C that] he will read the book]

(b) I know [CP [C ø] he will read the book]

(c) I do not know [CP what [C ø] he has got in his pocket]

(d) I cannot forget [CP what a great time [C ø] I had]

(e) I do not know [CP [C whether] he is guilty or not]

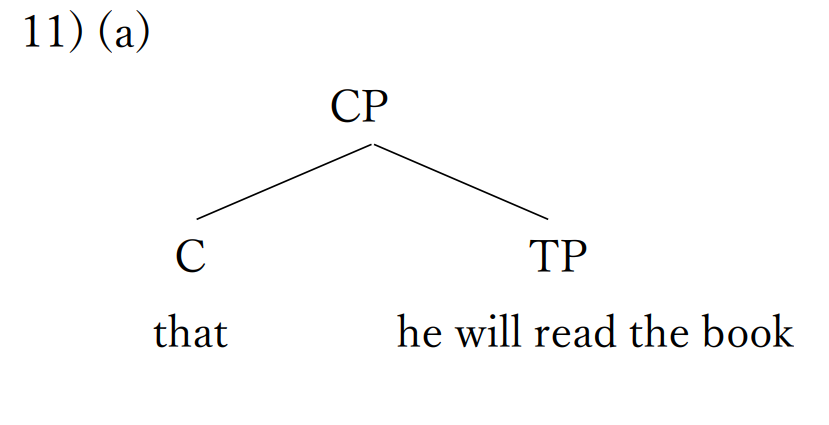

(11) (a)

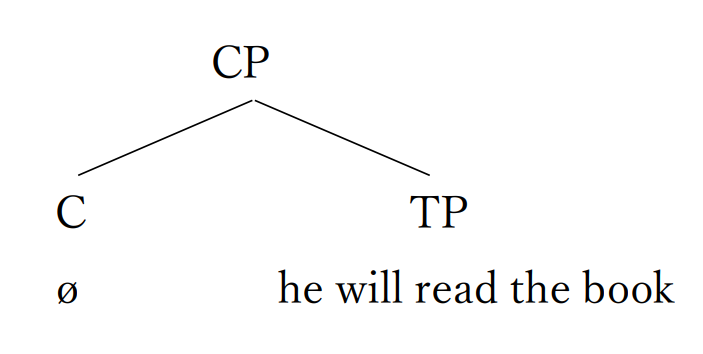

(b)

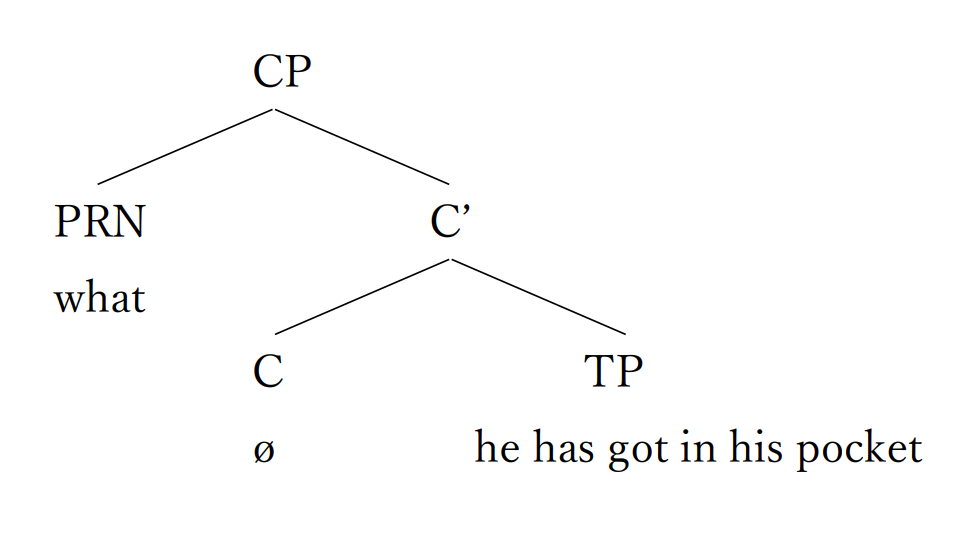

(c)

We interpret the embedded clause in (10a) [CP [C that] he will read the book] as a declarative clause because the embedded clause has the declarative complementizer that in the head of the CP position. We interpret the embedded clause in (10c) [CP what [C ø] he has got in his pocket] as a wh-question clause because the embedded clause has a wh-question word what in the specifier of the CP position. This wh-question word acts as a wh-question operator. An operator has the function of changing the meaning of a clause. Generative grammarians believe human languages have wh-question operators, yes-no question operators such as whether in (10e), conditional operators like if, relative clause operators, negative operators and so on (Radford 2016).

Keep in mind that the embedded clause in (10c) has a null complementizer as the head of the CP. A null constituent has no phonological form, which means that the null constituent is not pronounced but has a syntactic function. This null complementizer in (10c) does not seem to affect the interpretation of the embedded clause. The embedded clause in (10d) [CP what a great time [C ø] I had] has an exclamatory phrase what a great time in the specifier of the CP position. Since the embedded clause in (10d) has this exclamatory phrase in the specifier of the CP position, the embedded clause is interpreted as an exclamatory clause. The null complementizer in (10d) also does not affect the interpretation of the clause.

In contrast to (10cd), the embedded clause in (10b) [CP [C ø] he will read the book] has no specifier in the CP layer. The CP in (10b) has only the null complementizer ø as its head. In this case, the embedded clause (10b) is interpreted as a declarative clause. According to Radford (2016), a clause is interpreted as declarative by default when the CP of the clause lacks a specifier but has a null complementizer as the head of the CP. Since the embedded clause in (10e) [CP [C whether] he is guilty or not] has the yes-no question operator whether as the head of the CP, the embedded clause is interpreted as a yes-no interrogative clause.

As the examples in (10) show, we seem to judge the type of a clause by checking the head and the specifier of the clause’s CP. Linguists call the head and the specifier of a phrase the edge of the phrase. Thus, as Radford (2016) claims, human languages seem to have the following condition for judging the type of a clause. (Noam Chomsky (1995) put forward the idea of the clause typing condition. Andrew Radford (2016) modified Chomsky’s idea.)

(12) Clause Typing Condition

We judge the type of a clause (i.e. whether it is declarative, wh-question, yes-no question, conditional or exclamatory etc.) by checking the edge of the clause’s CP.

The clause typing condition (12) means that every clause is a CP. Even root clauses are CPs. Thus, the TP in (9) he will read the book is incomplete as a clause. We interpret the example in (9) as a declarative clause. Thus, the example in (9) must be a CP, not a TP. We merge a declarative null complementizer ø with the TP to form a CP ø he will read the book. Since the CP has the null C as its head, we interpret the clause as declarative by default (Radford 2016).
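The Clause Typing Condition in (12) can be sketched as a small lookup over the edge of the CP. This is a toy illustration only: the two dictionaries are my own assumed mini-lexicon covering just the items in (10a-e), `clause_type` is a hypothetical function name, and `None` stands for an empty specifier position or for the null complementizer ø.

```python
from typing import Optional

# Assumed toy lexicon, limited to the items that appear in examples (10a-e).
HEAD_TYPES = {"that": "declarative", "whether": "yes-no question"}
SPECIFIER_TYPES = {"what": "wh-question", "what a great time": "exclamatory"}


def clause_type(specifier: Optional[str], head: Optional[str]) -> str:
    """Type a clause by inspecting the edge (specifier and head) of its CP."""
    if specifier is not None:
        # An operator in the specifier position types the clause.
        return SPECIFIER_TYPES[specifier]
    if head is not None:
        # Otherwise an overt complementizer types the clause.
        return HEAD_TYPES[head]
    # No specifier and a null complementizer: declarative by default.
    return "declarative"


print(clause_type(None, "that"))               # (10a) declarative
print(clause_type(None, None))                 # (10b) declarative
print(clause_type("what", None))               # (10c) wh-question
print(clause_type("what a great time", None))  # (10d) exclamatory
print(clause_type(None, "whether"))            # (10e) yes-no question
```

The root clause he will read the book corresponds to the call `clause_type(None, None)`: its CP has no specifier and a null C, so the default declarative reading applies.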

Sections 3-4 are available from the link below.

References

Ando, S (2005) Gendai Eibunnpou Kougi [Lectures on Modern English Grammar]. Tokyo: Kaitakusha.

Brinton, L. J. and Arnovick, L. K. (2017) The English Language: A Linguistic History. 3rd edition. Oxford: Oxford University Press.

Bybee, J. (2010) Language, Usage and Cognition. Cambridge: Cambridge University Press.

Chomsky, N. (1955/1975) The Logical Structure of Linguistic Theory. New York: Plenum.

Chomsky, N. (1957) Syntactic Structures. Leiden: Mouton & Co.

Chomsky, N. (1980) “On Binding.” Linguistic Inquiry. Vol. 11. No.1. Winter, 1980. pp. 1-46.

Chomsky, N. (1981) Lectures on Government and Binding: The Pisa Lectures. The Hague: Mouton de Gruyter. (Originally published by Foris Publications.)

Chomsky, N. (1986) Barriers. Cambridge, MA: MIT Press.

Chomsky, N. (1995) The Minimalist Program. Cambridge, MA: MIT Press.

Chomsky, N. (1998) “Minimalist Inquiries: The Framework.” In Martin, R., Michaels, D. and Uriagereka, J. (eds.) (2000) Step by Step: Essays on Minimalist Syntax in Honor of Howard Lasnik. Cambridge, MA: MIT Press.

Chomsky, N. (2001) “Derivation by Phase.” In Kenstowicz, M. (ed.) Ken Hale: A Life in Language. Cambridge, MA: MIT Press. 1-52.

Chomsky, N. (2004) “Beyond Explanatory Adequacy.” In Belletti, A. (ed.) (2004) Structures and Beyond: The Cartography of Syntactic Structures. Vol. 3. Oxford: Oxford University Press.

Chomsky, N. (2005) “Three Factors in Language Design.” Linguistic Inquiry, Vol. 36, Number 1, Winter 2005: 1-22.

Chomsky, N. (2008) “On Phases.” In Freidin, R., Otero, C. P. and Zubizarreta, M. L. (eds.) (2008) Foundational Issues in Linguistic Theory: Essays in Honor of Jean-Roger Vergnaud. Cambridge, MA: MIT Press. 133-166.

Chomsky, N. (2021) “Minimalism: where we are now, and where we are going.” Lecture at the 161st meeting of the Linguistic Society of Japan. YouTube. https://www.youtube.com/watch?v=X4F9NSVVVuw&t=4738s (accessed on 13th May 2022).

Chomsky, N. and Lasnik, H. (1977) “Filters and Control.” Linguistic Inquiry 8: 425-504.

Cinque, G. (2020) The Syntax of Relative Clauses: A Unified Analysis. Cambridge: Cambridge University Press.

Donati, C. and Cecchetto, C. (2011) “Relabeling Heads: A Unified Account for Relativization Structures.” Linguistic Inquiry, Vol. 42, Number 4, Fall 2011: 519-560.

Greenberg, J. H. (1963) “Some Universals of Grammar with Particular Reference to the Order of Meaningful Elements.” In Denning, K. and Kemmer, S. (eds.) (1990) On Language: Selected Writings of Joseph H. Greenberg. Stanford: Stanford University Press. pp. 40-70.

Inoue, K. (2006) “Case (with Special Reference to Japanese).” In Everaert, M. and van Riemsdijk, H. C. (eds.) The Blackwell Companion to Syntax. Vol. 1. Oxford: Blackwell Publishing. pp. 295-373.

Jackendoff, R. (1977) X-bar Syntax: A Study of Phrase Structure. Cambridge, MA: MIT Press.

Kegl, J., Senghas, A. and Coppola, M. (1999) “Creation through Contact: Sign Language Emergence and Sign Language Change in Nicaragua.” In DeGraff, M. (ed.) (1999) Language Creation and Language Change: Creolization, Diachrony, and Development. Cambridge, MA: MIT Press. 177-237.

Langacker, R. W. (1991) Foundations of Cognitive Grammar. Vol. 2: Descriptive Application. Stanford: Stanford University Press.

Radford, A. (1988) Transformational Grammar: A First Course. Cambridge: Cambridge University Press.

Radford, A. (2004) Minimalist Syntax. Cambridge: Cambridge University Press.

Radford, A. (2009) Analyzing English Sentences: A Minimalist Approach. Cambridge: Cambridge University Press.

Radford, A. (2016) Analyzing English Sentences. 2nd edition. Cambridge: Cambridge University Press.

Rizzi, L. (2009) “Movement and Concepts of Locality.” In Piattelli-Palmarini, M., Uriagereka, J. and Salaburu, P. (eds.) (2009) Of Minds and Language: A Dialogue with Noam Chomsky in the Basque Country. Oxford: Oxford University Press.

Roberts, I. (2007) Diachronic Syntax. Cambridge: Cambridge University Press.

Roberts, I. (2021) Diachronic Syntax. 2nd edition. Cambridge: Cambridge University Press.

Sheehan, M., Biberauer, T., Roberts, I. and Holmberg, A. (2017) The Final-Over-Final Condition: A Syntactic Universal. Cambridge, MA: MIT Press.

Further Reading

Beginners in generative grammar should read textbooks such as Radford (1988, 2009, 2016).

More advanced students should consult primary literature such as Chomsky (1981, 1995, 2001, 2004, 2008).

For those who are interested in language change from a generative perspective, I recommend Roberts (2021).
